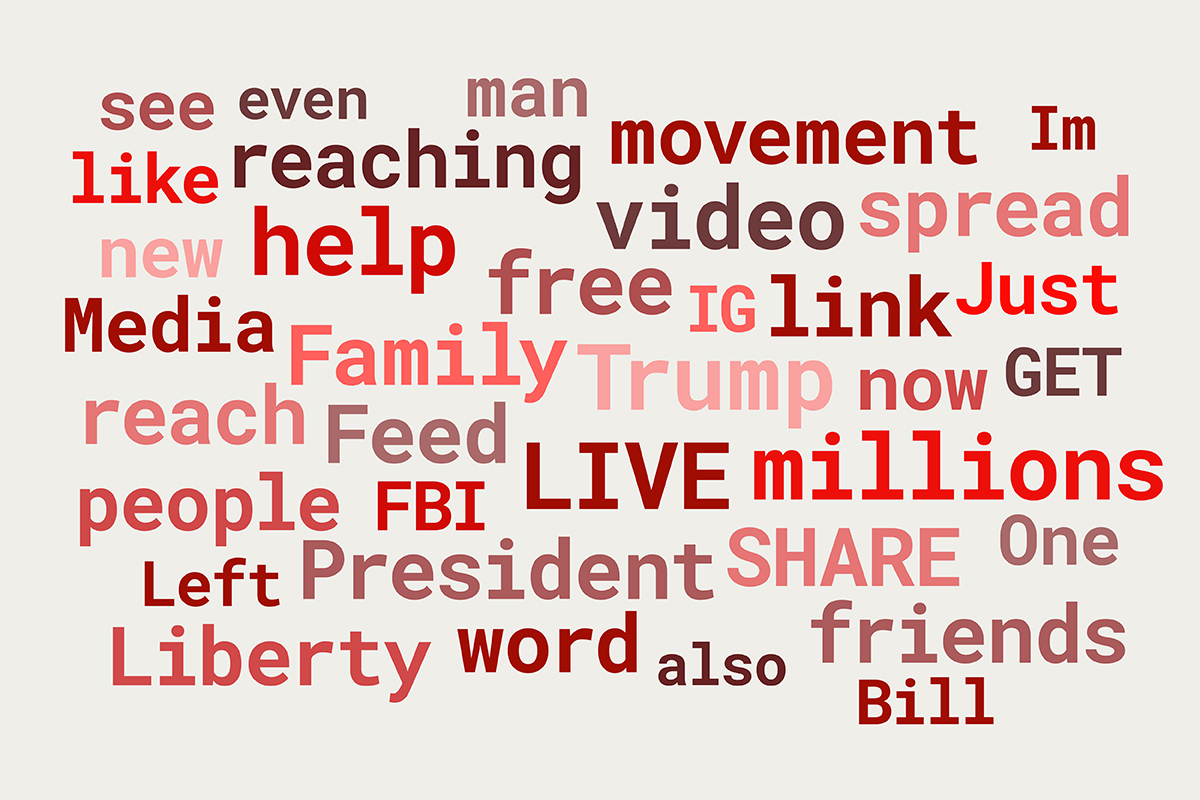

CHARLOTTESVILLE, Va. — On Twitter, David Duke, former Grand Wizard of the Ku Klux Klan, sometimes tweets more than 30 times a day to nearly 50,000 followers, recently calling for the “chasing down” of specific black Americans and claiming the LGBTQ community is in need of “intensive psychiatric treatment.”

On Facebook, James Allsup, a right-wing advocate, posted a photo comparing migrant children at the border to Jewish people behind a fence during the Holocaust with the caption, “They present it like it’s a bad thing #BuildTheWall.”

On Gab, a censorship-free alternative to Twitter, Patrick Little, a 2018 candidate for U.S. Senate, claims ovens are a means of preserving the Aryan race. And Billy Roper, a well-known voice of neo-Nazism, posts “Let God Burn Them” as an acronym for Lesbian Gay Bisexual Transgender.

Facebook, Twitter and other social media companies offer billions of people unparalleled access to the world. Users can tweet at the president of the United States, build support for social movements such as Black Lives Matter or inspire thousands to march with a simple hashtag.

“What social media does is it allows people to find each other and establish digital communities and relationships,” said Benjamin Lee, senior research associate for the Centre for Research and Evidence on Security Threats. “Not to say that extreme sentiment is growing or not, but it is a lot more visible.”

Social media also allows something else: a largely uncensored collection of public opinion and calls to action, including acts of violence, hatred and bigotry.

Months before the violent 2017 Unite the Right rally in Charlottesville, Virginia, people associated with the far-right movement used the online chat room Discord to encourage like-minded users to protest the city’s efforts to remove long-standing Confederate statues — particularly one of Gen. Robert E. Lee.

Discord was originally a chat space for the online gaming community, but some participants used the platform to discuss weapons they might brandish at the Charlottesville rally. Some discussed guns and shields, and one suggested putting a “6-8 inch double-threaded screw” into an ax handle.

Multiple posts discussed the logistics of running a vehicle into the expected crowds of counterprotesters.